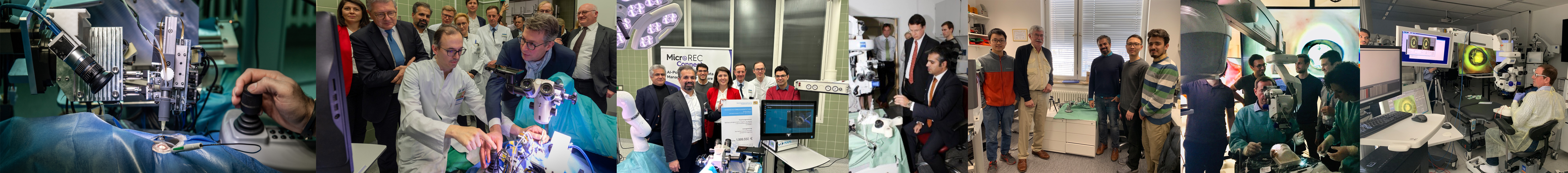

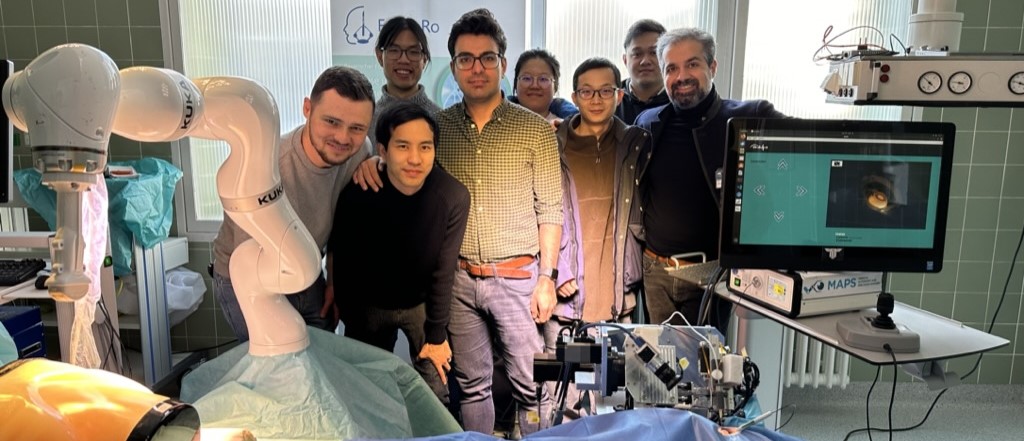

The Medical Autonomy and Precision Surgery lab (MAPS) was established in 2015 to enhance surgical interventions with advanced technologies. Since then, MAPS has demonstrated clinical achievements in robotics, AI, computer vision, and machine intelligence. Currently, the main focus of the lab is advancing clinical automation for high-precision clinical applications such as ophthalmology.

The multidisciplinary MAPS team consists of researchers and students from various disciplines, including medicine, computer science, electrical engineering, and mechanical engineering.

The lab offers education and training from undergraduate to graduate level in all relevant disciplines. MAPS collaborates with several national and international research groups, providing exchange programs.

Our Team

PD Dr.-Ing. habil. med. M. Ali Nasseri

Founding Director of MAPS

Alireza Alikhani

Doctoral Candidate

Lead of the Robotic Team

Korab Hoxha

Doctoral Candidate

Angelo Henriques

Doctoral Candidate

Satoshi Inagaki

Doctoral Candidate

Yinzheng Zhao

Doctoral Candidate

Junjie Yang

Doctoral Candidate

Zhihao Zhao

Doctoral Candidate

News

ICRA 2024:

Our research group will attend the IEEE ICRA conference in Yokohama in 2024. Five papers have been accepted and will be presented. Additionally, our research group has one best paper award nomination. The videos of these five papers can be seen below:

ForNeRo

The introduction of robotic assistance systems into the clinical workflow significantly increases technical, social, and organizational complexity in the operating theatre. With ForNeRo, we aim to improve the integration of these systems, taking into account the needs and capacities of the OR staff. This is to be achieved with the support of machine learning, simulation, augmented reality, and UI technologies. The investigations focus on the optimal placement and use of the robotic systems.

Publications

1. Zhou M, Hennerkes F, Liu J, Jiang Z, Wendler T, Nasseri MA, Iordachita I, Navab N: Theoretical error analysis of spotlight-based instrument localization for retinal surgery. Robotica 2023, 41(5):1536-1549.

2. Zhou M, Guo X, Grimm M, Lochner E, Jiang Z, Eslami A, Ye J, Navab N, Knoll A, Nasseri MA: Needle detection and localisation for robot-assisted subretinal injection using deep learning. CAAI Transactions on Intelligence Technology 2023.

3. Zhao Z, Yang J, Faghihroohi S, Huang K, Maier M, Navab N, Nasseri MA: Label-Preserving Data Augmentation in Latent Space for Diabetic Retinopathy Recognition. In: International Conference on Medical Image Computing and Computer-Assisted Intervention: 2023: Springer; 2023: 284-294.

4. Zhao Z, Faghihroohi S, Yang J, Huang K, Navab N, Maier M, Nasseri MA: Unobtrusive biometric data de-identification of fundus images using latent space disentanglement. Biomed Opt Express 2023, 14(10):5466-5483.

5. Zhao Y, Jablonka A-M, Maierhofer NA, Roodaki H, Eslami A, Maier M, Nasseri MA, Zapp D: Comparison of Robot-Assisted and Manual Cannula Insertion in Simulated Big-Bubble Deep Anterior Lamellar Keratoplasty. Micromachines 2023, 14(6):1261.

6. Yang J, Zhao Z, Shen S, Zapp D, Maier M, Huang K, Navab N, Nasseri MA: EyeLS: Shadow-Guided Instrument Landing System for Intraocular Target Approaching in Robotic Eye Surgery. arXiv preprint arXiv:2311.08799 2023.

7. Sommersperger M, Dehghani S, Matten P, Mach K, Nasseri MA, Roodaki H, Eck U, Navab N: Semantic Virtual Shadows (SVS) for Improved Perception in 4D OCT Guided Surgery. In: International Conference on Medical Image Computing and Computer-Assisted Intervention: 2023: Springer; 2023: 408-417.

8. Parvareh A, Ibrahimi F, Nasseri MA: Nonlinear swimming magnetically driven microrobot influenced by a pulsatile blood flow through adaptive backstepping control having weight estimation. International Journal of Dynamics and Control 2023:1-12.

9. Maierhofer NA, Jablonka A-M, Roodaki H, Nasseri MA, Eslami A, Klaas J, Lohmann CP, Maier M, Zapp D: iOCT-guided simulated subretinal injections: a comparison between manual and robot-assisted techniques in an ex-vivo porcine model. Journal of Robotic Surgery 2023:1-8.

10. Liang H, Wang T, Xia J, Nasseri MA, Lin H, Huang K: Autonomous Clear Corneal Incision Guided by Force–Vision Fusion. IEEE Transactions on Industrial Electronics 2023.

11. Friedrich J, Sandner A, Nasseri A, Maier M, Zapp D: Accelerated corneal cross-linking (18mW/cm2 for 5 min) with HPMC-riboflavin in progressive keratoconus–5 years follow-up. Graefe's Archive for Clinical and Experimental Ophthalmology 2023:1-7.

12. Dehghani S, Sommersperger M, Zhang P, Martin-Gomez A, Busam B, Gehlbach P, Navab N, Nasseri MA, Iordachita I: Robotic Navigation Autonomy for Subretinal Injection via Intelligent Real-Time Virtual iOCT Volume Slicing. arXiv preprint arXiv:2301.07204 2023.

13. Alikhani A, Inagaki S, Yang J, Dehghani S, Sommersperger M, Huang K, Maier M, Navab N, Nasseri MA: PKC-RCM: Preoperative Kinematic Calibration for Enhancing RCM Accuracy in Automatic Vitreoretinal Robotic Surgery. IEEE Access 2023.

14. Sommersperger M, Martin-Gomez A, Mach K, Gehlbach PL, Nasseri MA, Iordachita I, Navab N: Surgical scene generation and adversarial networks for physics-based iOCT synthesis. Biomed Opt Express 2022, 13(4):2414-2430.

15. Mach K, Wei S, Kim JW, Martin-Gomez A, Zhang P, Kang JU, Nasseri MA, Gehlbach P, Navab N, Iordachita I: OCT-guided Robotic Subretinal Needle Injections: A Deep Learning-Based Registration Approach. In: 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM): 2022: IEEE Computer Society; 2022: 781-786.

16. Friedrich JS, Bleidißel N, Nasseri A, Feucht N, Klaas J, Lohmann CP, Maier M: iOCT in der klinischen Anwendung: Korrelation von intraoperativer Morphologie und postoperativem Ergebnis bei Patienten mit durchgreifendem Makulaforamen. Der Ophthalmologe 2022, 119(5):491.

17. Friedrich JS, Bleidißel N, Nasseri A, Feucht N, Klaas J, Lohmann CP, Maier M: iOCT in der klinischen Anwendung. Der Ophthalmologe 2022, 119(5):491-496.

18. Dehghani S, Sommersperger M, Yang J, Salehi M, Busam B, Huang K, Gehlbach P, Iordachita I, Navab N, Nasseri MA: ColibriDoc: An Eye-in-Hand Autonomous Trocar Docking System. In: 2022 International Conference on Robotics and Automation (ICRA): 2022: IEEE; 2022: 7717-7723.

19. Dehghani S, Busam B, Navab N, Nasseri A: BFS-Net: Weakly Supervised Cell Instance Segmentation from Bright-Field Microscopy Z-Stacks. arXiv preprint arXiv:2206.04558 2022.

20. Bosch CM, Baumann C, Dehghani S, Sommersperger M, Johannigmann-Malek N, Kirchmair K, Maier M, Nasseri MA: A Tool for High-Resolution Volumetric Optical Coherence Tomography by Compounding Radial- and Linear Acquired B-Scans Using Registration. Sensors 2022, 22(3):1135.

21. Zhou M, Wu J, Ebrahimi A, Patel N, Liu Y, Navab N, Gehlbach P, Knoll A, Nasseri MA, Iordachita I: Spotlight-Based 3D Instrument Guidance for Autonomous Task in Robot-Assisted Retinal Surgery. IEEE Robotics and Automation Letters 2021, 6(4):7750-7757.

22. Sommersperger M, Weiss J, Nasseri MA, Gehlbach P, Iordachita I, Navab N: Real-time tool to layer distance estimation for robotic subretinal injection using intraoperative 4D OCT. Biomed Opt Express 2021, 12(2):1085-1104.

23. Nasseri MA: Autonomy in Ophthalmic Microsurgery: Autonomie in Der Ophthalmologischen Mikrochirurgie. Technische Universität München; 2021.

24. Friedrich JS, Bleidißel N, Nasseri A, Feucht N, Klaas J, Lohmann CP, Maier M: iOCT in clinical use: Correlation of intraoperative morphology and postoperative visual outcome in patients with full thickness macular hole. Der Ophthalmologe: Zeitschrift der Deutschen Ophthalmologischen Gesellschaft 2021.

25. Friedrich J, Bleidißel N, Klaas J, Feucht N, Nasseri A, Lohmann C, Maier M: Large macular hole-Always a poor prognosis? Der Ophthalmologe: Zeitschrift der Deutschen Ophthalmologischen Gesellschaft 2021, 118(3):257-263.

26. Friedrich J, Bleidißel N, Klaas J, Feucht N, Nasseri A, Lohmann C, Maier M: Großes Makulaforamen–immer eine schlechte Prognose? Der Ophthalmologe 2021, 118(3):257-263.

27. Zhou M, Wu J, Ebrahimi A, Patel N, He C, Gehlbach P, Taylor RH, Knoll A, Nasseri MA, Iordachita I: Spotlight-based 3D Instrument Guidance for Retinal Surgery. In: 2020 International Symposium on Medical Robotics (ISMR): 2020: IEEE; 2020: 69-75.

28. Xia J, Bergunder SJ, Lin D, Yan Y, Lin S, Nasseri MA, Zhou M, Lin H, Huang K: Microscope-Guided Autonomous Clear Corneal Incision. In: 2020 IEEE International Conference on Robotics and Automation (ICRA): 2020: IEEE; 2020: 3867-3873.

29. Weiss J, Sommersperger M, Nasseri A, Eslami A, Eck U, Navab N: Processing-aware real-time rendering for optimized tissue visualization in intraoperative 4D OCT. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part V 23: 2020: Springer International Publishing; 2020: 267-276.

30. Vander Poorten E, Riviere CN, Abbott JJ, Bergeles C, Nasseri MA, Kang JU, Sznitman R, Faridpooya K, Iordachita I: Robotic Retinal Surgery. In: Handbook of Robotic and Image-Guided Surgery. edn.: Elsevier; 2020: 627-672.

31. Vander Poorten E, Riviere C, Abbott J, Bergeles C, Nasseri M, Kang J, Sznitman R, Faridpooya K, Iordachita I: Handbook of Robotic and Image-Guided Surgery. 2020.

32. Sarhan MH, Nasseri MA, Zapp D, Maier M, Lohmann CP, Navab N, Eslami A: Machine Learning Techniques for Ophthalmic Data Processing: A Review. IEEE Journal of Biomedical and Health Informatics 2020, 24(12):3338-3350.

33. Roodaki H, Maierhofer NA, Jablonka A-M, Nasseri A, Lohmann CP, Eslami A: OCT-based volumetric measurement of subretinal injection blebs in ex-vivo porcine eyes. Investigative Ophthalmology & Visual Science 2020, 61(7):472-472.

34. Roodaki H, Jablonka A-M, Maierhofer NA, Nasseri MA, Lohmann CP, Eslami A: Big-bubble DALK under 4D OCT: an ex-vivo porcine trial. Investigative Ophthalmology & Visual Science 2020, 61(9):PB002-PB002.

35. Maierhofer NA, Jablonka A-M, Roodaki H, Eslami A, Maier M, Nasseri A, Lohmann CP: Implications of robot-assisted subretinal injections guided by intraoperative OCT. Investigative Ophthalmology & Visual Science 2020, 61(7):3715-3715.

36. Maier M, Hattenbach L, Klein J, Nasseri A, Chronopoulos A, Strobel M, Lohmann C, Feucht N: Real-time optical coherence tomography-assisted high-precision vitreoretinal surgery in the clinical routine. Der Ophthalmologe: Zeitschrift der Deutschen Ophthalmologischen Gesellschaft 2020, 117(2):158-165.

37. Maier M, Hattenbach L, Klein J, Nasseri A, Chronopoulos A, Strobel M, Lohmann C, Feucht N: Echtzeit-optische Kohärenztomographie-assistierte Hochpräzisionsvitreoretinalchirurgie in der klinischen Routine. Der Ophthalmologe 2020, 117(2):158-165.

38. Jablonka A-M, Maierhofer NA, Roodaki H, Eslami A, Zapp D, Nasseri A, Lohmann CP: The effect of needle entry angle on bubble formation in DALK using the big-bubble technique. Investigative Ophthalmology & Visual Science 2020, 61(7):1862-1862.

39. Zhou M, Yu Q, Mahov S, Huang K, Eslami A, Maier M, Lohmann CP, Navab N, Zapp D, Knoll A: Towards Robotic-assisted Subretinal Injection: A Hybrid Parallel-Serial Robot System Design and Preliminary Evaluation. IEEE Transactions on Industrial Electronics 2019.

40. Zhou M, Wang X, Weiss J, Eslami A, Huang K, Maier M, Lohmann CP, Navab N, Knoll A, Nasseri MA: Needle Localization for Robot-assisted Subretinal Injection based on Deep Learning. In: 2019 International Conference on Robotics and Automation (ICRA): 2019: IEEE; 2019: 8727-8732.

41. Zhou M, Wang X, Weiss J, Eslami A, Huang K, Maier M, Lohmann CP, Navab N, Knoll A, Nasseri MA: Needle Localization for Robotic Subretinal Injection based on Deep Learning, to appear. In: 2019 IEEE International Conference on Robotics and Automation (ICRA): 2019; 2019.

42. Zhou M, Lohmann CP, Cerveri P, Nasseri MA: Reducing the Number of Degrees of Freedom to Control an Eye Surgical Robot through Classification of Surgical Phases. In: 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC): 2019: IEEE; 2019: 5403-5406.

43. Zhou M, Hao X, Eslami A, Huang K, Cai C, Lohmann CP, Navab N, Knoll A, Nasseri MA: 6DOF Needle Pose Estimation for Robot-Assisted Vitreoretinal Surgery. IEEE Access 2019, 7:63113-63122.

44. Zhou M, Cheng L, Dell’Antonio M, Wang X, Bing Z, Nasseri MA, Huang K, Knoll A: Peak Temperature Minimization for Hard Real-Time Systems Using DVS and DPM. Journal of Circuits, Systems and Computers 2019, 28(06):1950102.

45. Weiss J, Eck U, Nasseri MA, Maier M, Eslami A, Navab N: Layer-Aware iOCT Volume Rendering for Retinal Surgery. 2019.

46. Nasab MHA: Handbook of robotic and image-guided surgery. In.: Elsevier; 2019.

47. Maier M, Bohnacker S, Klein J, Klaas J, Feucht N, Nasseri A, Lohmann C: Vitrectomy and iOCT-assisted inverted ILM flap technique in patients with full thickness macular holes. Der Ophthalmologe: Zeitschrift der Deutschen Ophthalmologischen Gesellschaft 2019, 116(7):617-624.

48. Maier M, Bohnacker S, Klein J, Klaas J, Feucht N, Nasseri A, Lohmann C: Vitrektomie mit iOCT-assistierter invertierter ILM-Flap-Technik bei großen Makulaforamina. Der Ophthalmologe 2019, 116(7):617-624.

49. Heinrich D, Bohnacker S, Nasseri M, Feucht N, Lohmann C, Maier M: Intraoperative optical coherence tomography in explorative vitrectomy in patients with vitreous haemorrhage—A case series. Der Ophthalmologe 2019, 116:261-266.

50. Heinrich D, Bohnacker S, Nasseri M, Feucht N, Lohmann C, Maier M: Intraoperative optische Kohärenztomographie bei explorativer Vitrektomie an Patienten mit Glaskörperblutung–eine Fallserie. Der Ophthalmologe 2019, 116(3):261-266.

51. Eslami A, Huang K, Cai C, Lohmann CP, Navab N, Knoll A, Nasseri MA: 6DOF Needle Pose Estimation for Robot-assisted Vitreoretinal Surgery, accepted. In: IEEE Access: 2019; 2019.

52. Zhou M, Huang K, Eslami A, Roodaki H, Zapp D, Maier M, Lohmann CP, Knoll A, Nasseri MA: Precision Needle Tip Localization using Optical Coherence Tomography Images for Subretinal Injection, to appear. In: 2018 IEEE International Conference on Robotics and Automation (ICRA): 2018; 2018.

53. Zhou M, Huang K, Eslami A, Roodaki H, Zapp D, Maier M, Lohmann CP, Knoll A, Nasseri MA: Precision needle tip localization using optical coherence tomography images for subretinal injection. In: 2018 IEEE International Conference on Robotics and Automation (ICRA): 2018: IEEE; 2018: 4033-4040.

54. Zhou M, Hamad M, Weiss J, Eslami A, Huang K, Maier M, Lohmann CP, Navab N, Knoll A, Nasseri MA: Towards Robotic Eye Surgery: Marker-Free, Online Hand-Eye Calibration Using Optical Coherence Tomography Images. IEEE Robotics and Automation Letters 2018, 3(4):3944-3951.

55. Zhou M, Ayad M, Huang K, Maier M, Lohmann CP, Lin H, Navab N, Knoll A, Nasseri MA: Robot Learns Skills from Surgeon for Subretinal Injection. In: 2018 IEEE International Conference on Robotics and Automation (ICRA)-Workshop on Supervised Autonomy in Surgical Robotics: 2018; 2018.

56. Weiss J, Rieke N, Nasseri MA, Maier M, Lohmann CP, Navab N, Eslami A: Injection Assistance via Surgical Needle Guidance using Microscope-Integrated OCT (MI-OCT). Investigative Ophthalmology & Visual Science 2018, 59(9):287-287.

57. Weiss J, Rieke N, Nasseri MA, Maier M, Eslami A, Navab N: Fast 5DOF needle tracking in iOCT. International journal of computer assisted radiology and surgery 2018, 13:787-796.

58. Weiss J, Eslami A, Huang K, Maier M, Lohmann CP, Navab N, Knoll A, Nasseri MA: Towards Robotic Eye Surgery: Marker-free, Online Hand-eye Calibration using Optical Coherence Tomography Images, to appear. In: IEEE Robotics and Automation Letters (also presented at IROS 2018): 2018; 2018.

59. Thürauf S, Hornung O, Körner M, Vogt F, Knoll A, Nasseri MA: Model-Based Calibration of a Robotic C-Arm System Using X-Ray Imaging. Journal of Medical Robotics Research 2018:1841002.

60. Nasseri MA, Maier M, Lohmann CP: Cannula and Instrument for Inserting a Catheter. In.: Google Patents; 2018.

61. Matinfar S, Nasseri MA, Eck U, Kowalsky M, Roodaki H, Navab N, Lohmann CP, Maier M, Navab N: Surgical soundtracks: automatic acoustic augmentation of surgical procedures. International journal of computer assisted radiology and surgery 2018, 13:1345-1355.

62. Maier MM, Nasseri A, Framme C, Bohnacker S, Becker MD, Heinrich D, Agostini H, Feucht N, Lohmann CP, Hattenbach LO: Die intraoperative optische Kohärenztomografie in der Netzhaut-Glaskörper-Chirurgie. Aktuelle Erfahrungen und Ausblick auf künftige Entwicklungsschritte. Klinische Monatsblätter für Augenheilkunde 2018, 235(09):1028-1034.

63. Maier M, Nasseri A, Framme C, Bohnacker S, Becker M, Heinrich D, Agostini H, Feucht N, Lohmann C, Hattenbach L: Intraoperative optical coherence tomography in vitreoretinal surgery: clinical experiences and future developments. Klinische Monatsblatter fur Augenheilkunde 2018, 235(9):1028-1034.

64. He C-Y, Huang L, Yang Y, Liang Q-F, Li Y-K: Research and realization of a master-slave robotic system for retinal vascular bypass surgery. Chinese Journal of Mechanical Engineering 2018, 31(1):1-10.

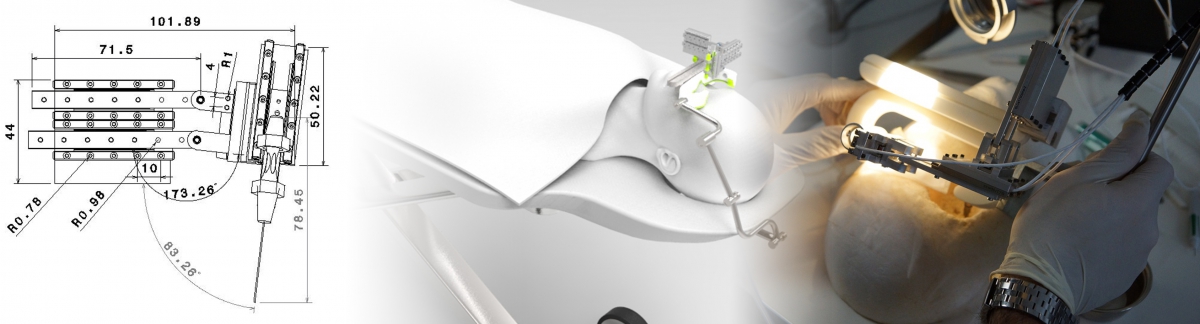

65. Feucht N, Zapp D, Reznicek L, Lohmann CP, Maier M, Mayer CS: Multimodal imaging in acute retinal ischemia: spectral domain OCT, OCT-angiography and fundus autofluorescence. International journal of ophthalmology 2018, 11(9):1521.

66. Borghesan G, Ourak M, Lankenau E, Hüttmann G, Schulz-Hildebrant H, Willekens K, Stalmans P, Reynaerts D, Vander Poorten E: Single scan OCT-based retina detection for robot-assisted retinal vein cannulation. Journal of Medical Robotics Research 2018, 3(02):1840005.

67. Zhou M, Roodaki H, Eslami A, Chen G, Huang K, Maier M, Lohmann CP, Knoll A, Nasseri MA: Needle Segmentation in Volumetric Optical Coherence Tomography Images for Ophthalmic Microsurgery. Applied Sciences 2017, 7(8):748.

68. Zhou M, Huang K, Eslami A, Roodaki H, Lin H, Lohmann CP, Knoll A, Nasseri MA: Beveled needle position and pose estimation based on optical coherence tomography in ophthalmic microsurgery. In: 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO): 2017: IEEE; 2017: 308-313.

69. Thürauf S, Körner M, Vogt F, Hornung O, Nasseri MA, Knoll A: Environment effects at phantom-based X-ray pose measurements. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC): 2017: IEEE; 2017: 1836-1839.

70. Roodaki H, Navab N, Eslami A, Stapleton C, Navab N: Sonifeye: Sonification of visual information using physical modeling sound synthesis. IEEE transactions on visualization and computer graphics 2017, 23(11):2366-2371.

71. Reznicek L, Burzer S, Laubichler A, Nasseri A, Lohmann CP, Feucht N, Ulbig M, Maier M: Structure-function relationship comparison between retinal nerve fibre layer and Bruch's membrane opening-minimum rim width in glaucoma. International Journal of Ophthalmology 2017, 10(10):1534.

72. Pettenkofer M, Nasseri MA, Zhou M, Lohmann C: Retinal Surface Detection in intraoperative Optical Coherence Tomography (iOCT). Investigative Ophthalmology & Visual Science 2017, 58(8):680-680.

73. Patel AJ: Kinematics, Dynamics, and Control of Four-Wheeled Mobile Robot. Texas A&M University-Kingsville; 2017.

74. Nasseri MA, Eslami A, Zapp DM, Bohnacker S, Zhou M, Lohmann C, Maier MM: Intraoperative OCT Guided Robotic Sub-retinal Surgery. Investigative Ophthalmology & Visual Science 2017, 58(8):5003-5003.

75. Nasseri M, Maier M, Lohmann C: A targeted drug delivery platform for assisting retinal surgeons for treating Age-related Macular Degeneration (AMD). In: Engineering in Medicine and Biology Society (EMBC), 2017 39th Annual International Conference of the IEEE: 2017: IEEE; 2017: 4333-4338.

76. Matinfar S, Nasseri MA, Eck U, Roodaki H, Navab N, Lohmann CP, Maier M, Navab N: Surgical soundtracks: Towards automatic musical augmentation of surgical procedures. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, September 11-13, 2017, Proceedings, Part II 20: 2017: Springer International Publishing; 2017: 673-681.

77. Maroofian R, Riemersma M, Jae LT, Zhianabed N, Willemsen MH, Wissink-Lindhout WM, Willemsen MA, de Brouwer AP, Mehrjardi MYV, Ashrafi MR: B3GALNT2 mutations associated with non-syndromic autosomal recessive intellectual disability reveal a lack of genotype–phenotype associations in the muscular dystrophy-dystroglycanopathies. Genome medicine 2017, 9(1):118.

78. Klein JS, Bohnacker S, Nasseri MA, Feucht N, Lohmann C, Maier MM: Correlation of Intraoperative Morphology and Postoperative Outcome in Patients with Full Thickness Macular Hole (FTMH). Investigative Ophthalmology & Visual Science 2017, 58(8):2792-2792.

79. Huang K, Zhou M, Lajblich C, Lohmann CP, Knoll A, Ling Y, Lin H, Nasseri MA: A flexible head fixation for ophthalmic microsurgery. In: Chinese Automation Congress (CAC), 2017: 2017: IEEE; 2017: 6707-6710.

80. Heinrich D, Bohnacker S, Nasseri MA, Feucht N, Lohmann C, Maier MM: Use of intraoperative Optical Coherence Tomography (i-OCT) in vitreous haemorrhage due to diabetic retinopathy. Investigative Ophthalmology & Visual Science 2017, 58(8):5010-5010.

81. Eslami A, Duca S, Roodaki H, Nasseri MA, Bohnacker S, Zapp DM, Maier MM, Straub J: Incremental Enhancement of Live Intraoperative OCT Scans by Temporal Analysis. Investigative Ophthalmology & Visual Science 2017, 58(8):3124-3124.

82. Duca S, Filippatos K, Straub J, Nasseri MA, Zapp DM, Navab N, Maier MM, Roodaki H, Eslami A: Evaluation of Automatic Following of Anatomical Structures for Live Intraoperative OCT Repositioning. Investigative Ophthalmology & Visual Science 2017, 58(8):4832-4832.

83. Bohnacker S, Nasseri MA, Feucht N, Maier MM, Lohmann C: Intraoperative Optical Coherence Tomography (iOCT) guided intra- and subretinal surgery–a new surgical technique. Investigative Ophthalmology & Visual Science 2017, 58(8):5002-5002.

84. Thürauf S, Wolf M, Körner M, Vogt F, Hornung O, Nasseri MA, Knoll A: A realistic X-ray simulation for C-arm geometry calibration. In: Biomedical Robotics and Biomechatronics (BioRob), 2016 6th IEEE International Conference on: 2016: IEEE; 2016: 383-388.

85. Thürauf S, Vogt F, Hornung O, Körner M, Nasseri MA, Knoll A: Experimental evaluation of the accuracy at the C-arm pose estimation with x-ray images. In: Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th Annual International Conference of the: 2016: IEEE; 2016: 3859-3862.

86. Heinrich D, Bohnacker S, Nasseri MA, Feucht N, Lohmann C, Maier MM: Use of intraoperative Optical Coherence Tomography (i-OCT) in vitreous haemorrhage. Investigative Ophthalmology & Visual Science 2016, 57(12):4487-4487.

87. Gijbels A, Willekens K, Esteveny L, Stalmans P, Reynaerts D, Vander Poorten EB: Towards a clinically applicable robotic assistance system for retinal vein cannulation. In: 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob): 2016: IEEE; 2016: 284-291.

88. Feucht N, Maier MM, Lohmann C, Reznicek L: Evaluation of OCT angiography in detection of Central Serous Chorioretinopathy. Investigative Ophthalmology & Visual Science 2016, 57(12):4958-4958.

89. Bielski A, Lohmann CP, Maier M, Zapp D, Nasseri MA: Graphical user interface for a robotic workstation in a surgical environment. In: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC): 2016: IEEE; 2016: 5212-5215.

90. Thürauf S, Vogt F, Hornung O, Körner M, Nasseri MA, Knoll A: Tuning of X-ray parameters for noise reduction of an image-based focus position measurement of a C-arm X-ray system. In: Proc of IROS: 2nd Workshop on Alternative Sensing for Robot Perception: Beyond Laser and Vision Hamburg, Germany: 2015; 2015: 13-15.

91. Thürauf S, Hornung O, Körner M, Vogt F, Nasseri MA, Knoll A: Evaluation of a 9D-Position Measurement Method of a C-Arm Based on X-Ray Projections. In: Proc of MICCAI: 1st Interventional Microscopy Workshop Munich, Germany: 2015; 2015: 9-16.

92. Nasseri MA, Zapp D, Lohmann CP, Maier M: Robotic retinal surgery and sub-retinal interventions. Ophthalmologe 2015, 10(2015):S97.

93. Nasseri MA: Manipulator mit serieller und paralleler Kinematik. In.: DE Patent PCT/DE2013/100,249; 2015.

94. Nasseri M: Hybrid Parallel-Serial Micromanipulator for Assisting Ophthalmic Surgery. Technische Universität München; 2015.

95. Eder M: Compliant Modular Worm-like Robotic Mechanisms with Decentrally Controlled Fluid Actuators. Technische Universität München; 2015.

96. Barthel A, Trematerra D, Nasseri MA, Zapp D, Lohmann CP, Knoll A, Maier M: Haptic interface for robot-assisted ophthalmic surgery. In: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC): 2015: IEEE; 2015: 4906-4909.

97. Nasseri M, Knoll A, Eder M: Manipulator mit serieller und paralleler Kinematik. In.: WO Patent WO2014005583 A1; 2014.

98. Nasseri M, Gschirr P, Eder M, Nair S, Kobuch K, Maier M, Zapp D, Lohmann C, Knoll A: Virtual fixture control of a hybrid parallel-serial robot for assisting ophthalmic surgery: An experimental study. In: Biomedical Robotics and Biomechatronics (2014 5th IEEE RAS & EMBS International Conference on): 2014: IEEE; 2014: 732-738.

99. Mahdizadeh A, Nasseri MA, Knoll A: Transparency optimized interaction in telesurgery devices via time-delayed communications. In: 2014 IEEE Haptics Symposium (HAPTICS): 2014: IEEE; 2014: 603-608.

100. Knoll A, Nasseri MA, Eder M: Manipulator with serial and parallel kinematics. 2014.

101. Knoll A, Nasseri MA, Eder M: Manipulator mit serieller und paralleler Kinematik. 2014.

102. Farzaneh MH, Nair S, Nasseri MA, Knoll A: Reducing communication-related complexity in heterogeneous networked medical systems considering non-functional requirements. In: 16th International Conference on Advanced Communication Technology: 2014: IEEE; 2014: 547-552.

103. Ashraf A, Zarei F, Hadianfard MJ, Kazemi B, Mohammadi S, Naseri M, Nasseri A, Khodadadi M, Sayadi M: Comparison the effect of lateral wedge insole and acupuncture in medial compartment knee osteoarthritis: a randomized controlled trial. The Knee 2014, 21(2):439-444.

104. Ashraf A, Farahangiz S, Jahromi BP, Setayeshpour N, Naseri M, Nasseri A: Correlation between radiologic sign of lumbar lordosis and functional status in patients with chronic mechanical low back pain. Asian spine journal 2014, 8(5):565.

105. Wu J, Nasseri MA, Eder M, Azqueta Gavaldon M, Lohmann C, Knoll A: The 3d eyeball FTA model with needle rotation. In: APCBEE Procedia 3rd International Conference on Biomedical Engineering and Technology, ICBET 2012: 2013: Elsevier; 2013.

106. Nasseri MA, Eder M, Nair S, Dean E, Maier M, Zapp D, Lohmann C, Knoll A: The introduction of a new robot for assistance in ophthalmic surgery. In: Engineering in Medicine and Biology Society (EMBC), 2013 35th Annual International Conference of the IEEE: 2013: IEEE; 2013: 5682-5685.

107. Nasseri MA, Eder M, Eberts D, Nair S, Maier M, Zapp D, Lohmann C, Knoll A: Kinematics and dynamics analysis of a hybrid parallel-serial micromanipulator designed for biomedical applications. In: Advanced Intelligent Mechatronics (AIM), 2013 IEEE/ASME International Conference on: 2013; 2013.

108. Nasseri M, Eder M, Nair S, Dean E, Maier M, Zapp D, Lohmann C, Knoll A: 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). 2013.

109. Nair S, Nasseri MA, Eder M, Lohmann C, Knoll A: Embedded Middleware and Hard Real-time based Architecture for Robot Assisted Ophthalmic Surgery. In: The Hamlyn Symposium on Medical Robotics: 2013; 2013: 83.

110. Maier M, Nasseri MA, Zapp D, Eder M, Kobuch K, Lohmann C, Knoll A: Robot-assisted vitreoretinal surgery. Investigative Ophthalmology & Visual Science 2013, 54(15):3318.

111. Maier M, Nasseri MA, Zapp D, Eder M, Kobuch K, Lohmann C, Knoll A: Robot-assisted vitreoretinal surgery. The Association for Research in Vision and Ophthalmology (ARVO 2013) 2013.

112. Ali NM, Lohmann CP, Knoll A: Robot for assisting Ophthalmic Surgery. In: Proceedings of the International Society for Medical Innovation and Technology (iSMIT): 2013; 2013.

113. Ali NA, Salim SIM, Rahim RA, Anas SA, Noh ZM, Samsudin SI: PWM controller design of a hexapod robot using FPGA. In: 2013 IEEE International Conference on Control System, Computing and Engineering: 2013: IEEE; 2013: 310-314.

114. Rashidi H, Sulaiman NN, Hashim N: Available online at www. sciencedirect. com. Procedia Engineering 2012, 44:2010-2012.

115. Pak M, Nasseri A: Load-velocity Characteristics of a Stick-slip Piezo Actuator. Proceedings der Actuator 2012 2012:P. 755.

116. Nasseri MA, Dean E, Nair S, Eder M, Knoll A, Maier M, Lohmann CP: Clinical motion tracking and motion analysis during ophthalmic surgery using electromagnetic tracking system. In: 2012 5th International Conference on BioMedical Engineering and Informatics: 2012: IEEE; 2012: 1058-1062.

117. Nasseri MA, Asadpour M: Control of flocking behavior using informed agents: an experimental study. In: 2011 IEEE Symposium on Swarm Intelligence: 2011: IEEE; 2011: 1-6.

118. Anderson M, Fierro R, Naseri A, Lu W, Ferrari S: Cooperative navigation for heterogeneous autonomous vehicles via approximate dynamic programming. In: Proc IEEE Conf Decision and Control: 2011; 2011.

119. Meining A, Atasoy S, Chung A, Navab N, Yang G: “Eye-tracking” for assessment of image perception in gastrointestinal endoscopy with narrow-band imaging compared with white-light endoscopy. Endoscopy 2010, 42(08):652-655.

120. Masehian E, Naseri A: Mobile robot online motion planning using generalized Voronoi graphs. 2010.

121. Arvin F, Samsudin K, Nasseri MA: Design of a differential-drive wheeled robot controller with pulse-width modulation. In: Innovative Technologies in Intelligent Systems and Industrial Applications, 2009 CITISIA 2009: 2009: IEEE; 2009: 143-147.

122. Wu J, Nasseri M, Eder M, Gavaldon M, Lohmann C, Knoll A, Boukhenous S, Attari M, Remram Y, Skupch A: Available online at www. sciencedirect. com.

123. UK SD, Walker P, Amiri S, Masri B, Anglin C, Wilson D, Blakeney W, Khan R, Palmer J, Park I: Arthroscopy in the management of knee osteoarthritis.

124. Thürauf S, Hornung O, Körner M, Vogt F, Nasseri MA, Knoll A: Absolute accurate calibration of a robotic C-arm system based on X-ray observations using a kinematic model. In: Proc of ICRA: Workshop on C4 Surgical Robots: Compliant, Continuum, Cognitive, and Collaborative,(Singapore, Singapore, 2017): 43-46.

125. Nasseri MA: Modeling and analysis of a micro-manipulator for vitreo retinal surgery.

126. Hoxha K, Alikhani A, Inagaki S, Ferle M, Maier M, Nasseri MA: Modelling and Development of a Mechanical Eye for the Evaluation of Robotic Systems for Surgery.

127. Alikhani A, Oßner S, Dehghani S, Busam B, Inagaki S, Maier M, Navab N, Nasseri MA: RCIT: A Robust Catadioptric-based Instrument 3D Tracking Method For Microsurgical Instruments In a Single-Camera System.